Gradient boosting¶

You may recall that we last encountered gradients when discussing the gradient descent algorithm in the context of fitting linear regression models. For a regression model with n parameters, the parameters plus the cost (loss) function to be minimized define an (n+1)-dimensional space, and the loss evaluated at every combination of parameter values forms a surface within that space. The regression model is fitted by repeatedly stepping in the direction of steepest descent until we reach the lowest point of the surface, where every direction leads 'uphill.' The final model is made up of the parameter estimates that define that location on the surface.
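As a reminder of what that procedure looks like, here is a minimal sketch of gradient descent for a one-feature linear regression. The synthetic data, the step size, and the iteration count are illustrative assumptions, not values taken from this notebook.

```python
import numpy as np

# Synthetic data: y = 2x + 1 plus a little noise (illustrative values only)
rng = np.random.default_rng(0)
x = rng.uniform(-1, 1, size=200)
y_line = 2.0 * x + 1.0 + 0.1 * rng.normal(size=200)

# Two parameters (slope w, intercept b), both starting at zero
w, b = 0.0, 0.0
step_size = 0.1  # hypothetical learning rate

for _ in range(1000):
    error = (w * x + b) - y_line
    # Partial derivatives of the mean squared error with respect to w and b
    grad_w = 2.0 * np.mean(error * x)
    grad_b = 2.0 * np.mean(error)
    # Step downhill; x and y_line are never modified between iterations
    w -= step_size * grad_w
    b -= step_size * grad_b

print(w, b)
```

The estimates should end up close to the slope and intercept used to generate the data, and the data themselves stay fixed throughout the loop.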

Throughout all iterations of the gradient descent algorithm for linear regression, one thing remains constant: The underlying data used to estimate the parameters and calculate the loss function never changes. In gradient boosting, however, the underlying data do change.

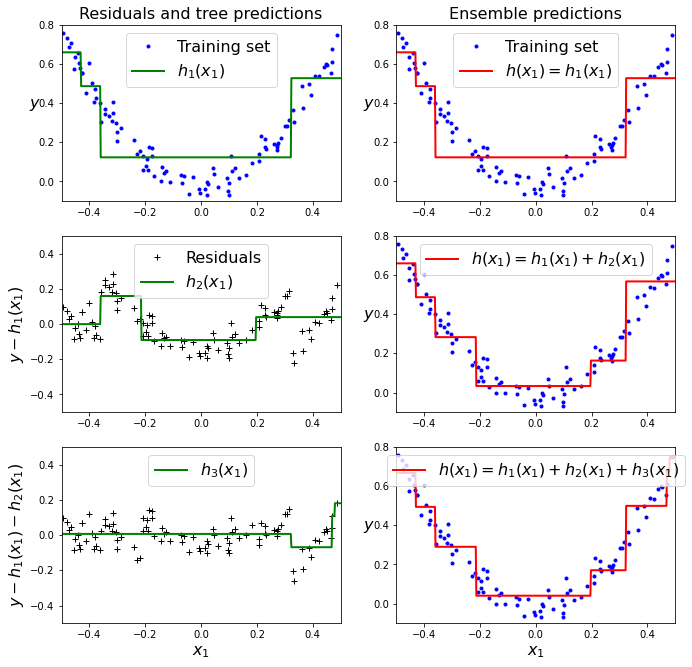

Each time we fit a decision tree, we extract the residuals. We then fit a new decision tree, using those residuals as the outcome to be predicted. After reaching a stopping point, we add together the predicted values from all of the decision trees to create the final gradient boosted prediction.
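The procedure just described can be sketched as a short loop. This is only an illustration under stated assumptions: the helper names `fit_boosted_trees` and `predict_boosted_trees` are hypothetical, the data are synthetic, and there is no learning rate or stopping rule beyond a fixed number of trees.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

def fit_boosted_trees(X, y, n_trees=3, max_depth=2):
    """Fit each tree to the residuals left by the trees before it."""
    trees = []
    residuals = y.copy()
    for _ in range(n_trees):
        stage_tree = DecisionTreeRegressor(max_depth=max_depth, random_state=42)
        stage_tree.fit(X, residuals)
        # The next tree will be trained on whatever this one failed to explain
        residuals = residuals - stage_tree.predict(X)
        trees.append(stage_tree)
    return trees

def predict_boosted_trees(trees, X):
    """Sum the predictions of all trees in the ensemble."""
    return sum(stage_tree.predict(X) for stage_tree in trees)

# Synthetic noisy quadratic data (illustrative only)
rng = np.random.default_rng(42)
X_demo = rng.random((100, 1)) - 0.5
y_demo = 3 * X_demo[:, 0] ** 2 + 0.05 * rng.normal(size=100)

trees = fit_boosted_trees(X_demo, y_demo, n_trees=3)

# Training error of the ensemble after 1, 2 and 3 trees
mse_by_stage = [
    np.mean((y_demo - predict_boosted_trees(trees[:k], X_demo)) ** 2)
    for k in range(1, len(trees) + 1)
]
print(mse_by_stage)
```

On the training data the error cannot increase from one stage to the next, because each tree predicts the mean residual within its leaves.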

Gradient boosting can work on any combination of loss function and model type, as long as we can calculate the derivatives of the loss function with respect to the model's predictions. Most often, however, gradient boosting uses decision trees, and minimizes either the sum of squared residuals (regression trees) or the negative log-likelihood (classification trees).
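To see why fitting trees to residuals counts as following a gradient, consider the squared-error case. The notation here is an assumption made for illustration: F(x) is the ensemble's current prediction, F_m is the ensemble after m trees, and h_m is the m-th tree. With a factor of one half added for convenience, the negative derivative of the loss with respect to the prediction is exactly the residual:

```latex
L\bigl(y, F(x)\bigr) = \tfrac{1}{2}\,\bigl(y - F(x)\bigr)^2
\qquad\Longrightarrow\qquad
-\frac{\partial L}{\partial F(x)} = y - F(x)

F_m(x) = F_{m-1}(x) + h_m(x),
\qquad h_m \text{ fitted to } r_i = y_i - F_{m-1}(x_i)
```

Each new tree therefore approximates a step in the direction of steepest descent of the loss. For other loss functions the residual is replaced by the corresponding negative gradient.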

Let’s go through a simple regression example using decision trees as the base predictors (gradient boosting also works well for classification tasks, as the Titanic example later on shows). This is called Gradient Tree Boosting, or Gradient Boosted Regression Trees. First, let’s fit a DecisionTreeRegressor to the training set.

Imports¶

import pandas as pd

import numpy as np

import scipy

import matplotlib.pyplot as plt

from sklearn import tree

from IPython.display import Image

%matplotlib inline

from sklearn import preprocessing

from sklearn.ensemble import GradientBoostingClassifier

from sklearn.metrics import classification_report, confusion_matrix, roc_curve, auc

Set parameters¶

np.random.seed(42)

X = np.random.rand(100, 1) - 0.5

y = 3*X[:, 0]**2 + 0.05 * np.random.randn(100)

Instantiate and train regressors¶

from sklearn.tree import DecisionTreeRegressor

tree_reg1 = DecisionTreeRegressor(max_depth=2, random_state=42)

tree_reg1.fit(X, y)

Now train a second DecisionTreeRegressor on the residual errors made by the first predictor:

y2 = y - tree_reg1.predict(X)

tree_reg2 = DecisionTreeRegressor(max_depth=2, random_state=42)

tree_reg2.fit(X, y2)

Then we train a third regressor on the residual errors made by the second predictor:

y3 = y2 - tree_reg2.predict(X)

tree_reg3 = DecisionTreeRegressor(max_depth=2, random_state=42)

tree_reg3.fit(X, y3)

Ensemble the regressors and make a prediction¶

Now we have an ensemble containing three trees. It can make predictions on a new instance simply by adding up the predictions of all the trees:

X_new = np.array([[0.8]])

y_pred = sum(reg.predict(X_new) for reg in (tree_reg1, tree_reg2, tree_reg3))  # 'reg' avoids reusing the name of the imported sklearn 'tree' module

y_pred

The figure below represents the predictions of these three trees in the left column, and the ensemble’s predictions in the right column. In the first row, the ensemble has just one tree, so its predictions are exactly the same as the first tree’s predictions. In the second row, a new tree is trained on the residual errors of the first tree. On the right you can see that the ensemble’s predictions are equal to the sum of the predictions of the first two trees. Similarly, in the third row another tree is trained on the residual errors of the second tree. You can see that the ensemble’s predictions gradually get better as trees are added to the ensemble.

Run the cell below to produce a visual representation.¶

def plot_predictions(regressors, X, y, axes, label=None, style="r-", data_style="b.", data_label=None):
    # Evaluate the summed prediction of all regressors on a fine grid
    x1 = np.linspace(axes[0], axes[1], 500)
    y_pred = sum(regressor.predict(x1.reshape(-1, 1)) for regressor in regressors)
    # Plot the data points, then the prediction curve
    plt.plot(X[:, 0], y, data_style, label=data_label)
    plt.plot(x1, y_pred, style, linewidth=2, label=label)
    if label or data_label:
        plt.legend(loc="upper center", fontsize=16)
    plt.axis(axes)

plt.figure(figsize=(11,11))

plt.subplot(321)

plot_predictions([tree_reg1], X, y, axes=[-0.5, 0.5, -0.1, 0.8], label="$h_1(x_1)$", style="g-", data_label="Training set")

plt.ylabel("$y$", fontsize=16, rotation=0)

plt.title("Residuals and tree predictions", fontsize=16)

plt.subplot(322)

plot_predictions([tree_reg1], X, y, axes=[-0.5, 0.5, -0.1, 0.8], label="$h(x_1) = h_1(x_1)$", data_label="Training set")

plt.ylabel("$y$", fontsize=16, rotation=0)

plt.title("Ensemble predictions", fontsize=16)

plt.subplot(323)

plot_predictions([tree_reg2], X, y2, axes=[-0.5, 0.5, -0.5, 0.5], label="$h_2(x_1)$", style="g-", data_style="k+", data_label="Residuals")

plt.ylabel("$y - h_1(x_1)$", fontsize=16)

plt.subplot(324)

plot_predictions([tree_reg1, tree_reg2], X, y, axes=[-0.5, 0.5, -0.1, 0.8], label="$h(x_1) = h_1(x_1) + h_2(x_1)$")

plt.ylabel("$y$", fontsize=16, rotation=0)

plt.subplot(325)

plot_predictions([tree_reg3], X, y3, axes=[-0.5, 0.5, -0.5, 0.5], label="$h_3(x_1)$", style="g-", data_style="k+")

plt.ylabel("$y - h_1(x_1) - h_2(x_1)$", fontsize=16)

plt.xlabel("$x_1$", fontsize=16)

plt.subplot(326)

plot_predictions([tree_reg1, tree_reg2, tree_reg3], X, y, axes=[-0.5, 0.5, -0.1, 0.8], label="$h(x_1) = h_1(x_1) + h_2(x_1) + h_3(x_1)$")

plt.xlabel("$x_1$", fontsize=16)

plt.ylabel("$y$", fontsize=16, rotation=0)

#save_fig("gradient_boosting_plot")

plt.show()
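For comparison, scikit-learn provides `GradientBoostingRegressor`, which automates the same idea. The sketch below regenerates the synthetic data under different variable names so that it runs on its own. Note one difference from the hand-built ensemble: by default the estimator starts from a constant prediction (the mean of y) before adding trees, so its three trees are not expected to match the three trees fitted above exactly.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor

# Same recipe as the synthetic data above, under separate names
np.random.seed(42)
X_demo = np.random.rand(100, 1) - 0.5
y_demo = 3 * X_demo[:, 0] ** 2 + 0.05 * np.random.randn(100)

# Three depth-2 trees with no shrinkage, mirroring the manual example
gbrt = GradientBoostingRegressor(max_depth=2, n_estimators=3,
                                 learning_rate=1.0, random_state=42)
gbrt.fit(X_demo, y_demo)

# staged_predict yields the ensemble's prediction after 1, 2, ... trees
stage_mse = [np.mean((y_demo - y_stage) ** 2)
             for y_stage in gbrt.staged_predict(X_demo)]
print(stage_mse)
print(gbrt.predict(np.array([[0.8]])))
```

Lowering `learning_rate` below 1.0 shrinks each tree's contribution, which usually means more trees are needed to reach the same training error.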

Now that you have a solid understanding of gradient boosting in the regression scenario, let's apply the same algorithm to a classification problem: predicting survival in the Titanic dataset.

Use pandas read_csv to load the Titanic data set into a dataframe called df.¶

Hint: in this case you can use dropna() to discard any incomplete rows. We disregard them for the purpose of this exercise, but in real-world work you need to be much more careful in deciding how to handle incomplete observations.

df = pd.read_csv('titanic.csv')

df.dropna(inplace=True)

df.head()

Print the number of levels of each categorical variable using 'select_dtypes'.¶

dfo = df.select_dtypes(include='object')

#get levels for all variables

vn = pd.DataFrame(dfo.nunique()).reset_index()

vn.columns = ['VarName', 'LevelsCount']

vn = vn.sort_values(by='LevelsCount', ascending=False)  # assign the result; sort_values does not sort in place

vn

Create dummy features for the categorical features and add those to the 'df' dataframe. Make sure to also remove the original categorical columns from the dataframe.¶

df = pd.DataFrame(df.drop(dfo.columns,axis =1)).merge(pd.get_dummies(dfo.drop(['Name','Cabin','Ticket'],axis =1)),left_index=True,right_index=True).drop(['PassengerId'],axis =1)

print(df.shape)

df.head()
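To make the dummy-encoding step concrete, here is a small sketch on a toy dataframe. The three rows are invented for illustration and are not taken from the Titanic file; the column names merely echo it.

```python
import pandas as pd

# Toy data with one numeric and two categorical columns (invented values)
toy = pd.DataFrame({
    "Age": [22.0, 38.0, 26.0],
    "Sex": ["male", "female", "female"],
    "Embarked": ["S", "C", "S"],
})

# get_dummies replaces each listed column with one indicator column per level
encoded = pd.get_dummies(toy, columns=["Sex", "Embarked"])
print(encoded.columns.tolist())
```

The original `Sex` and `Embarked` columns disappear and are replaced by indicator columns such as `Sex_female` and `Embarked_S`, while the numeric `Age` column is left untouched.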

Print the number of null values for each column in the dataframe.¶

#Counts of null values

na_df=pd.DataFrame(df.isnull().sum().sort_values(ascending=False)).reset_index()

na_df.columns = ['VarName', 'NullCount']

na_df[(na_df['NullCount']>0)]

Create the X and y matrices from the dataframe, where y = df.Survived ¶

X = df.drop(columns='Survived')

y = df.Survived

Apply the standard scaler to the X matrix.¶

scaler = preprocessing.StandardScaler().fit(X)

X_scaled = scaler.transform(X)

Split X_scaled and y into 75/25 training and testing subsets.¶

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X_scaled, y, test_size=0.25, random_state=42)  # split the scaled features, as the step above asks

Run the cell below to test multiple learning rates in your gradient boosting classifier.¶

learning_rates = [0.05, 0.1, 0.25, 0.5, 0.75, 1]

for learning_rate in learning_rates:
    # Fit one classifier per learning rate, keeping all other settings fixed
    gb = GradientBoostingClassifier(n_estimators=20, learning_rate=learning_rate, max_features=2, max_depth=2, random_state=0)
    gb.fit(X_train, y_train)
    print("Learning rate: ", learning_rate)
    print("Accuracy score (training): {0:.3f}".format(gb.score(X_train, y_train)))
    print("Accuracy score (validation): {0:.3f}".format(gb.score(X_test, y_test)))
    print()
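Rather than reading the best learning rate off the printed output, the validation scores can be collected and the maximum picked programmatically. The sketch below uses a synthetic dataset from `make_classification` as a stand-in for the Titanic features, so the scores it prints say nothing about the Titanic data.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import train_test_split

# Synthetic stand-in data (assumption: any binary classification set would do)
X_demo, y_demo = make_classification(n_samples=400, n_features=8, random_state=0)
X_tr, X_val, y_tr, y_val = train_test_split(X_demo, y_demo, test_size=0.25, random_state=42)

candidate_rates = [0.05, 0.1, 0.25, 0.5, 0.75, 1]
validation_scores = {}
for rate in candidate_rates:
    gb_demo = GradientBoostingClassifier(n_estimators=20, learning_rate=rate,
                                         max_features=2, max_depth=2, random_state=0)
    gb_demo.fit(X_tr, y_tr)
    # Accuracy on the held-out split for this learning rate
    validation_scores[rate] = gb_demo.score(X_val, y_val)

# Learning rate with the highest validation accuracy (first one wins on ties)
best_learning_rate = max(validation_scores, key=validation_scores.get)
print(best_learning_rate, validation_scores[best_learning_rate])
```

Because the same held-out split is used both to choose the learning rate and to report accuracy, the winning score is likely to be somewhat optimistic; a separate validation split or cross-validation would give a cleaner estimate.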

Apply the best learning rate to the model fit and predict on the testing set. Print out the confusion matrix and the classification report to review the model performance.¶

import itertools

def plot_confusion_matrix(cm, classes,
                          normalize=False,
                          title='Confusion matrix',
                          cmap=plt.cm.Blues):
    """
    This function prints and plots the confusion matrix.
    Normalization can be applied by setting `normalize=True`.
    """
    if normalize:
        # Divide each row by its total so that rows sum to 1
        cm = cm.astype('float') / cm.sum(axis=1)[:, np.newaxis]
        print("Normalized confusion matrix")
    else:
        print('Confusion matrix, without normalization')

    # print(cm)

    plt.imshow(cm, interpolation='nearest', cmap=cmap)
    plt.title(title)
    plt.colorbar()
    tick_marks = np.arange(len(classes))
    plt.xticks(tick_marks, classes, rotation=45)
    plt.yticks(tick_marks, classes)

    fmt = '.2f' if normalize else 'd'
    thresh = cm.max() / 2.
    # Write each cell's value, in white on dark cells and black on light ones
    for i, j in itertools.product(range(cm.shape[0]), range(cm.shape[1])):
        plt.text(j, i, format(cm[i, j], fmt),
                 horizontalalignment="center",
                 color="white" if cm[i, j] > thresh else "black")

    plt.ylabel('True label')
    plt.xlabel('Predicted label')
    plt.tight_layout()

gb = GradientBoostingClassifier(n_estimators=20, learning_rate = 1, max_features=2, max_depth = 2, random_state = 0).fit(X_train, y_train)

y_pred = gb.predict(X_test)

print(classification_report(y_test,y_pred))

# Plot non-normalized confusion matrix

cnf_matrix = confusion_matrix(y_test,y_pred)

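# confusion_matrix orders classes by sorted label value: 0 (did not survive) first, then 1 (survived)
class_names = ['Not Survived','Survived']

plot_confusion_matrix(cnf_matrix, classes=class_names,
                      title='The Titanic dataset and predicting survival')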
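The label ordering and the row normalization used by `plot_confusion_matrix` can be checked on a tiny hand-made example. The ten labels below are invented for illustration.

```python
import numpy as np
from sklearn.metrics import confusion_matrix

# Invented labels: 0 = did not survive, 1 = survived
y_true = np.array([0, 0, 0, 0, 1, 1, 1, 1, 1, 1])
y_hat = np.array([0, 0, 0, 1, 1, 1, 1, 1, 0, 0])

# Rows are true classes, columns are predicted classes, both in sorted label order
cm = confusion_matrix(y_true, y_hat)
print(cm)  # [[3 1], [2 4]]

# Row normalization: each row is divided by the number of true members of that class
cm_normalized = cm.astype(float) / cm.sum(axis=1)[:, np.newaxis]
print(cm_normalized)
```

Row 0 corresponds to label 0 and row 1 to label 1, which is why the class names passed to the plotting function need to be listed in that order. After normalization, each diagonal entry is the recall for that class.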

Calculate the ROC curve for the model as well.¶

# Import necessary modules

# from sklearn.metrics import roc_curve

# Compute predicted probabilities: y_pred_prob

y_pred_prob = gb.predict_proba(X_test)

# Generate ROC curve values: fpr, tpr, thresholds

fpr,tpr,thresholds = roc_curve(y_test, y_pred_prob[:,1])

# Plot ROC curve

plt.plot([0, 1], [0, 1], 'k--')

plt.plot(fpr,tpr)

plt.xlabel('False Positive Rate')

plt.ylabel('True Positive Rate')

plt.title('ROC Curve')

plt.show()
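The ROC curve is often summarized by the area under it (AUC), which the `auc` function imported at the top of the notebook computes from the `fpr` and `tpr` arrays. The sketch below demonstrates this on synthetic stand-in data rather than the Titanic features, so the printed value is illustrative only.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.metrics import roc_curve, auc, roc_auc_score
from sklearn.model_selection import train_test_split

# Synthetic binary classification data (assumption, for a self-contained demo)
X_demo, y_demo = make_classification(n_samples=400, n_features=8, random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(X_demo, y_demo, test_size=0.25, random_state=42)

clf = GradientBoostingClassifier(n_estimators=20, max_depth=2, random_state=0)
clf.fit(X_tr, y_tr)

# Probability of the positive class (column 1) is the score used for ranking
scores = clf.predict_proba(X_te)[:, 1]
fpr_demo, tpr_demo, _ = roc_curve(y_te, scores)

# Area under the ROC curve via the trapezoidal rule
roc_auc = auc(fpr_demo, tpr_demo)
print(roc_auc)
```

An AUC of 0.5 corresponds to the dashed diagonal in the plot (random ranking), and 1.0 to a perfect ranking. `roc_auc_score(y_te, scores)` gives the same number in a single call.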